Microsoft Phi-3 is the tech giant’s next tiny titan

The race for ever-larger artificial intelligence models has been a hallmark of recent advancements. However, Microsoft is shaking things up with the Phi-3 Mini, an AI model boasting impressive capabilities despite its compact size.

Traditionally, success in large language models (LLMs) has been linked to the number of parameters, the learned numerical weights that encode what a model knows about language.

With computing costs and broad accessibility in mind, Microsoft built Phi-3 to challenge that assumption.

Microsoft Phi-3 Mini fits a giant into your pockets

GPT-3.5, a current frontrunner in the LLM race, is widely reported to have about 175 billion parameters, the figure OpenAI published for GPT-3. A parameter count that large gives a model a broad and nuanced grasp of language, but it comes at a cost: running such a model requires significant computational resources, making it expensive and power-hungry.

Microsoft’s mini model, on the other hand, takes a different approach. With a much smaller set of parameters, a mere 3.8 billion, Phi-3 Mini needs far less memory and compute than its larger counterparts.

That smaller footprint makes Microsoft Phi-3 Mini:

- Significantly less expensive to run

- A potential powerhouse for on-device AI applications

Another intriguing aspect of Phi-3 Mini is its training method.

Unlike its larger counterparts trained on massive datasets of text and code, Phi-3 Mini’s education involved a more curated selection. Researchers opted for a curriculum inspired by how children learn – using children’s books as a foundation.

This approach seems to have yielded positive results, with Phi-3 Mini demonstrating performance rivaling that of GPT-3.5 on several benchmarks.

With only 3.8 billion parameters, Phi-3 Mini outperforms larger models in terms of cost-effectiveness and on-device performance (Image credit)

Benchmarked for success

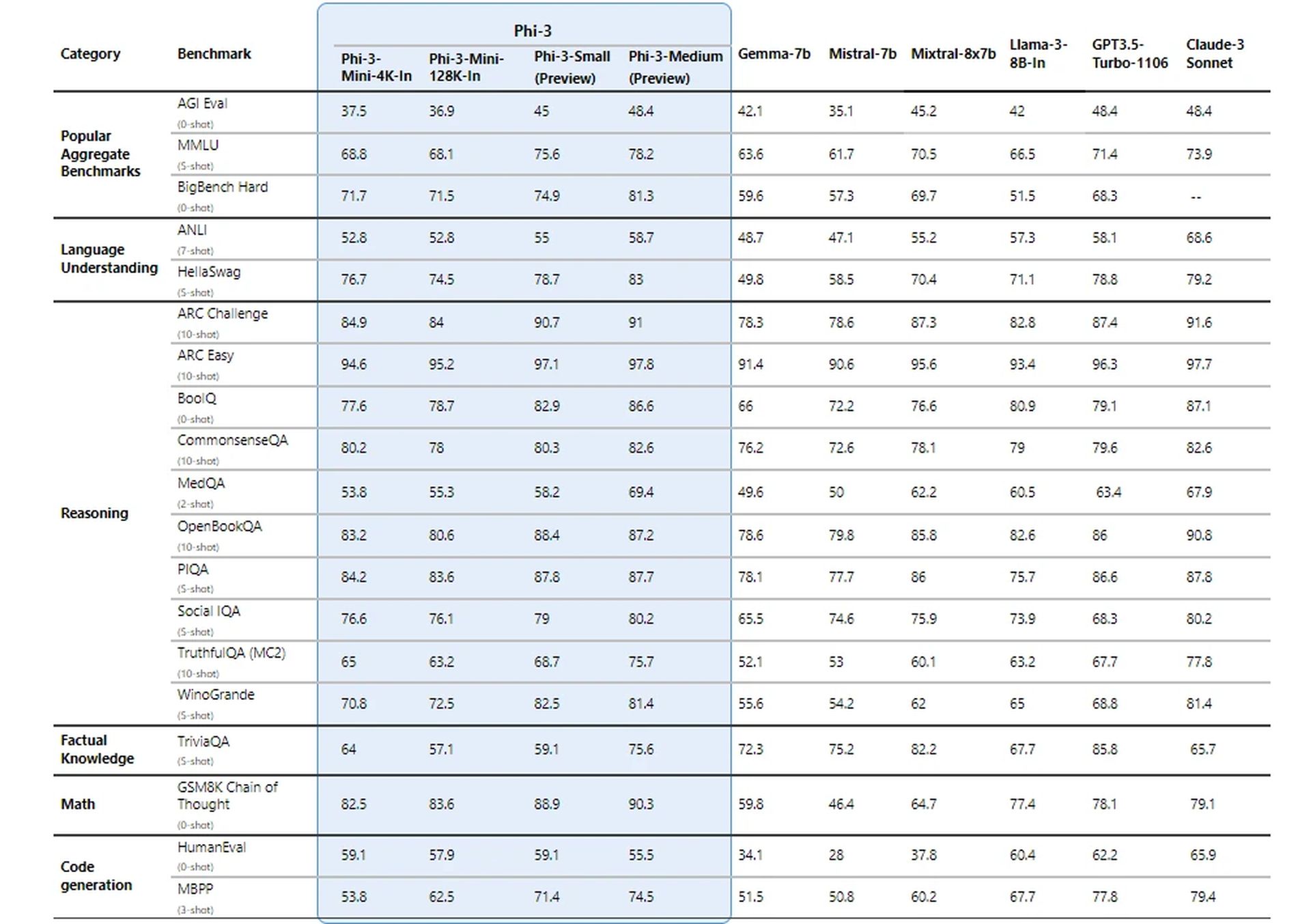

Microsoft researchers put the new model through its paces using established benchmarks for LLMs. The model achieved impressive scores on benchmarks such as MMLU (Massive Multitask Language Understanding, a multiple-choice test of knowledge and reasoning across dozens of academic subjects) and MT-bench (a multi-turn benchmark that grades a model’s conversational and instruction-following ability).

These results suggest that Phi-3 Mini, despite its size, can compete with the big names in the LLM game.

How does it achieve such impressive results?

The technical details of Phi-3 Mini reveal a fascinating approach to achieving impressive results with a remarkably small model size. Here’s a breakdown of the key aspects:

Transformer decoder architecture

Phi-3 Mini utilizes a transformer decoder architecture, a prevalent design choice for effective language models. This architecture processes text as a sequence and generates it one token at a time, with each token able to draw on all the tokens that came before it.
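To make the decoder idea concrete, here is a minimal sketch of the causal mask that decoder-only transformers use so that each position only sees earlier positions. It is an illustration of the general technique, not Phi-3’s actual code; the function name `causal_mask` and the toy token list are made up for this example.

```python
def causal_mask(seq_len: int) -> list[list[bool]]:
    """Return mask[i][j] = True when position i may attend to position j."""
    # A decoder may look at the current token and everything before it,
    # never at tokens that come later in the sequence.
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]


# Toy example: the token split below is illustrative, not a real tokenizer output.
tokens = ["Phi", "-3", "is", "small"]
for token, row in zip(tokens, causal_mask(len(tokens))):
    visible = [t for t, allowed in zip(tokens, row) if allowed]
    print(f"{token!r} attends to {visible}")
```

Each row of the mask grows by one entry, which is what lets the model predict the next token from only the text seen so far.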

Context length

The standard Phi-3 Mini operates with a context length of 4K (roughly 4,000) tokens. This defines the maximum number of tokens (words or parts of words) the model can consider at once when generating text. A longer context length lets the model take more of the preceding conversation into account but also requires more processing power.

Long context version (Phi-3-Mini-128K)

For tasks requiring a broader context, a variant called Phi-3-Mini-128K is available. This version extends the context length to a whopping 128,000 tokens, enabling it to handle more complex sequences of information.
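In practice, a context limit means an application has to decide what to drop when a conversation grows too long. The sketch below shows one common and simple policy, keeping only the most recent tokens; it is an assumption about how a caller might handle the limit, not something the Phi-3 paper prescribes. The helper `fit_to_context` and its `reserve_for_output` parameter are hypothetical names.

```python
def fit_to_context(
    token_ids: list[int],
    context_length: int,
    reserve_for_output: int = 0,
) -> list[int]:
    """Keep the most recent tokens that fit in the model's context window.

    reserve_for_output leaves room for the tokens the model will generate.
    """
    budget = context_length - reserve_for_output
    if budget <= 0:
        raise ValueError("reserve_for_output must be smaller than context_length")
    # Older tokens fall off the front; the newest ones are kept.
    return token_ids[-budget:]


# A 10-token history squeezed into a 4-token window, 1 slot kept for the reply.
history = list(range(10))
print(fit_to_context(history, context_length=4, reserve_for_output=1))
```

With a 128,000-token window the same call would rarely need to drop anything, which is the practical appeal of the long-context variant.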

Compatibility with existing tools

To benefit the developer community, Phi-3 Mini is built on a block structure similar to that of the Llama-2 family and uses the same tokenizer, with a vocabulary size of 32,064. This compatibility allows developers to adapt existing tools and libraries designed for Llama-2 when working with Phi-3 Mini.

Model parameters

Here’s where Phi-3 Mini truly shines. With only 3.8 billion parameters, it operates far below the numbers cited for larger models such as GPT-3.5 (reportedly 175 billion parameters).

This significant reduction in parameters translates to exceptional efficiency in terms of processing power and memory usage.
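As a rough sanity check on the 3.8 billion figure, the parameter count of a decoder-only transformer can be estimated from a handful of dimensions. The sketch below assumes the hidden size of 3,072, 32 layers, and 32,064-token vocabulary given in the Phi-3 technical report; the MLP inner width of 8,192, the gated three-matrix MLP, and the untied output layer are assumptions made for this estimate, and `decoder_param_count` is a hypothetical helper.

```python
def decoder_param_count(
    hidden: int,
    layers: int,
    vocab: int,
    mlp_inner: int,
    tied_embeddings: bool = False,
) -> int:
    """Estimate the weights in a Llama-style decoder (biases ignored)."""
    attention = 4 * hidden * hidden      # query, key, value, output projections
    mlp = 3 * hidden * mlp_inner         # gated MLP: gate, up, down matrices
    norms = 2 * hidden                   # two normalization layers per block
    per_layer = attention + mlp + norms
    embeddings = vocab * hidden          # input token embeddings
    output_head = 0 if tied_embeddings else vocab * hidden
    final_norm = hidden
    return layers * per_layer + embeddings + output_head + final_norm


# mlp_inner=8192 is an assumption, not a number from the article.
total = decoder_param_count(hidden=3072, layers=32, vocab=32064, mlp_inner=8192)
print(f"{total / 1e9:.2f} billion parameters")
```

Under these assumptions the estimate comes out at roughly 3.8 billion, with the transformer blocks accounting for almost all of it and the vocabulary tables for only a few percent.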

Saif Naik of ITCMAARS, whose team is working with fine-tuned versions of Phi-3, explains:

“Our goal with the Krishi Mitra copilot is to improve efficiency while maintaining the accuracy of a large language model. We are excited to partner with Microsoft on using fine-tuned versions of Phi-3 to meet both our goals—efficiency and accuracy!”

– Saif Naik, Head of Technology, ITCMAARS

Training methodology

Phi-3 Mini’s training draws inspiration from the “Textbooks Are All You Need” approach. This method emphasizes high-quality training data over simply scaling up the model size. The training data is carefully curated: web data heavily filtered by “educational level”, plus synthetic data generated by other LLMs.

This strategy allows Phi-3 Mini to achieve impressive results despite its compact size.

Data filtering for optimal learning

Unlike earlier work that trains models in either a “compute optimal regime” or an “over-train regime”, Phi-3 Mini focuses on a “data optimal regime” for its size. This involves meticulously filtering web data to ensure it contains the right level of “knowledge” and promotes reasoning skills.

For instance, general sports data might be excluded to prioritize information that improves the model’s ability to reason.
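The filtering idea can be pictured with a toy example. Microsoft has not published its filter, so everything below is hypothetical: the `Page` type, the `educational_score` field (standing in for some classifier’s output), and the 0.5 threshold are invented purely to show the shape of a score-and-threshold filter.

```python
from dataclasses import dataclass


@dataclass
class Page:
    text: str
    educational_score: float  # hypothetical classifier output in [0, 1]


def filter_for_reasoning(pages: list[Page], threshold: float = 0.5) -> list[Page]:
    """Keep pages whose (hypothetical) educational score meets the threshold."""
    return [page for page in pages if page.educational_score >= threshold]


# Made-up documents and scores, for illustration only.
corpus = [
    Page("Proof that the sum of two even numbers is even.", 0.92),
    Page("Final score from last night's league match.", 0.08),
    Page("How binary search halves the search space.", 0.81),
]
for page in filter_for_reasoning(corpus):
    print(page.text)
```

The trade-off is deliberate: a small model has limited capacity, so facts like match results are dropped to leave room for material that teaches reasoning.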

Post-training fine-tuning

After the core training process, the new model undergoes additional refinement through supervised fine-tuning (SFT) and direct preference optimization (DPO). SFT exposes the model to curated data across various domains, including math, coding, and safety principles. DPO helps steer the model away from unwanted behavior by training on pairs of responses in which the undesirable output is marked as “rejected” and a better one as preferred.

This post-training phase transforms Phi-3 Mini from a language model into a versatile and safe AI assistant.
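For readers curious about what DPO optimizes, the sketch below computes the standard DPO loss for a single preference pair from log-probabilities. It follows the published DPO formulation rather than Microsoft’s training code, which is not public; the function name, the example log-probabilities, and `beta=0.1` are illustrative assumptions.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one (chosen, rejected) response pair.

    Inputs are summed log-probabilities of each response under the model
    being trained (policy) and under a frozen reference model.
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # loss = -log(sigmoid(logits)), written in a numerically stable form
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))


# Illustrative numbers: the policy already favors the chosen response.
print(dpo_loss(-12.0, -20.0, -14.0, -15.0))
```

The loss shrinks as the policy raises the probability of the preferred response relative to the rejected one, compared with the reference model, which is how undesirable outputs get pushed down without a separate reward model.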

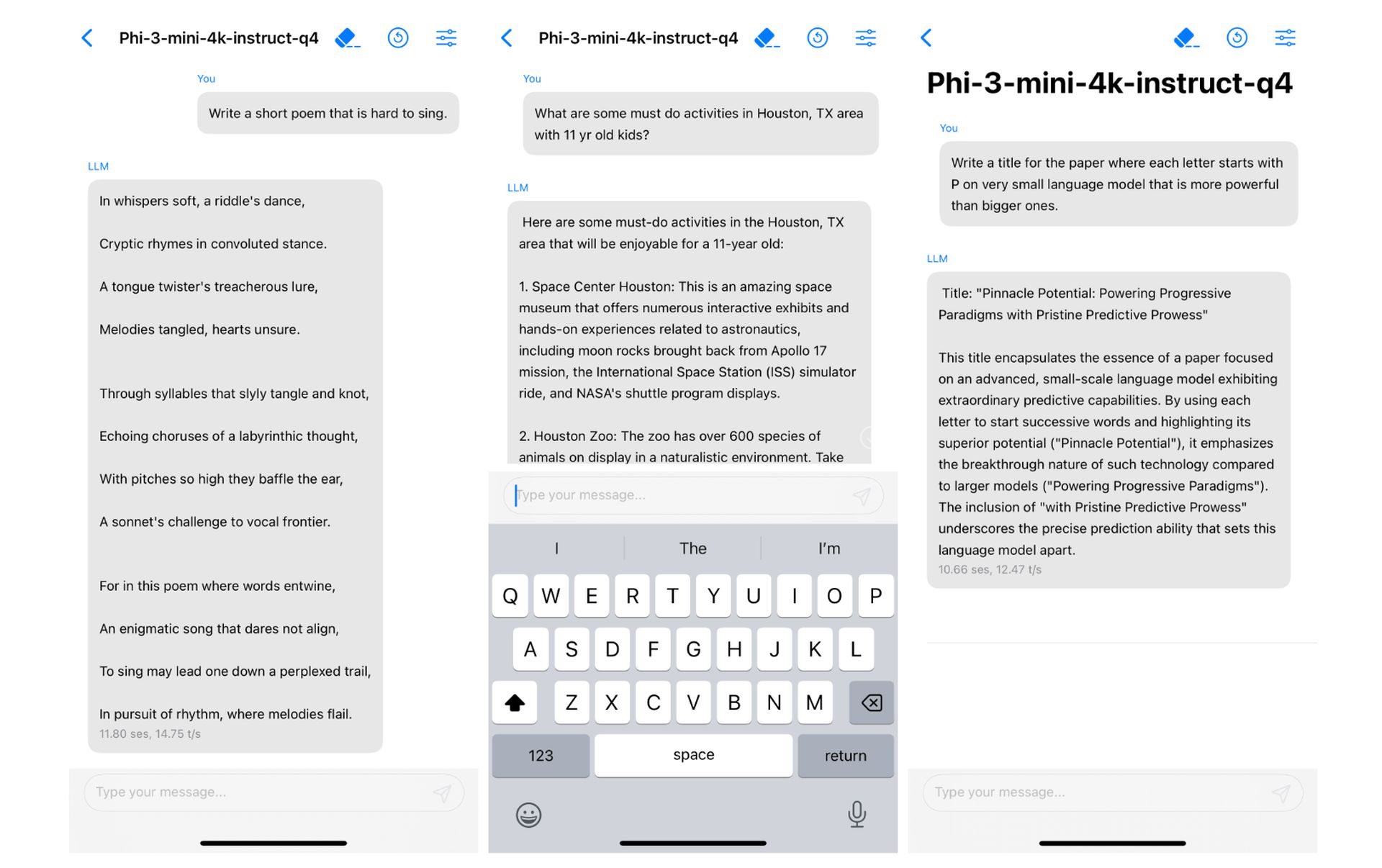

Efficient on-device performance

Phi-3 Mini’s small size translates to exceptional on-device performance. Quantized to 4 bits, the model occupies a mere 1.8 gigabytes of memory.
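The memory figure follows from simple arithmetic: bytes needed for the weights equal the parameter count times the bits per weight, divided by eight. The back-of-the-envelope sketch below uses the article’s 3.8 billion parameter count and ignores quantization metadata and runtime overhead; `weight_memory_gb` is a hypothetical helper name.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


PHI3_MINI_PARAMS = 3.8e9  # parameter count quoted in the article

for bits in (16, 8, 4):
    size = weight_memory_gb(PHI3_MINI_PARAMS, bits)
    print(f"{bits:>2}-bit weights: about {size:.1f} GB")
```

At 4 bits this gives about 1.9 GB, or roughly 1.77 GiB when counted in binary units, which is in line with the approximately 1.8 GB quoted; at 16 bits the same weights would need about four times as much.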

A match made in heaven

Do you remember Microsoft’s failed smartphone attempts? What about Apple’s failed Google Gemini integration deals in recent months? Or have you been following the news of Apple being vocal about integrating an on-device LLM with iOS 18 over the past few weeks?

Does it ring a bell?

The potential applications of Phi-3 Mini are vast. Its efficiency makes it ideal for integration into mobile devices, potentially enabling features like smarter virtual assistants and real-time language translation. Additionally, its cost-effectiveness could open doors for wider adoption by developers working on various AI-powered projects.

And that’s exactly what Apple was looking for. Of course, this is nothing more than a guess for now, but it wouldn’t be wrong to call it a “match made in heaven”. Besides, according to the research paper, Microsoft’s new model has already been run natively on an iPhone with an A16 Bionic chip.

Speculation arises regarding potential collaboration between Microsoft and Apple, considering the Phi-3 Mini’s compatibility with iOS devices and Apple’s recent interest in on-device LLM integration (Image credit)

Phi-3 Mini’s success hinges on a combination of factors – a well-suited architecture, efficient use of context length, compatibility with existing tools, a focus on high-quality training data, and optimization techniques. This unique approach paves the way for powerful and efficient AI models that can operate seamlessly on personal devices.

Featured image credit: vecstock/Freepik