Llama 3 benchmark reveals how Meta AI is holding up against ChatGPT and Gemini

Alongside rolling out the updated Meta AI across its platforms, Meta also published Llama 3 benchmark results for technology enthusiasts.

The benchmark offers independent researchers and developers a standardized testing suite to evaluate Llama 3’s performance on various tasks.

This transparency allows users to compare Llama 3’s strengths and weaknesses against other LLMs using the same benchmark, fostering a more objective understanding of its capabilities.

What does the Llama 3 benchmark show?

Meta established the Llama 3 benchmark, a comprehensive suite of evaluations designed to assess LLM performance across a range of tasks, including question answering, summarization, instruction following, and few-shot learning. The benchmark serves as a key tool for gauging Llama 3’s strengths and weaknesses against other LLMs.

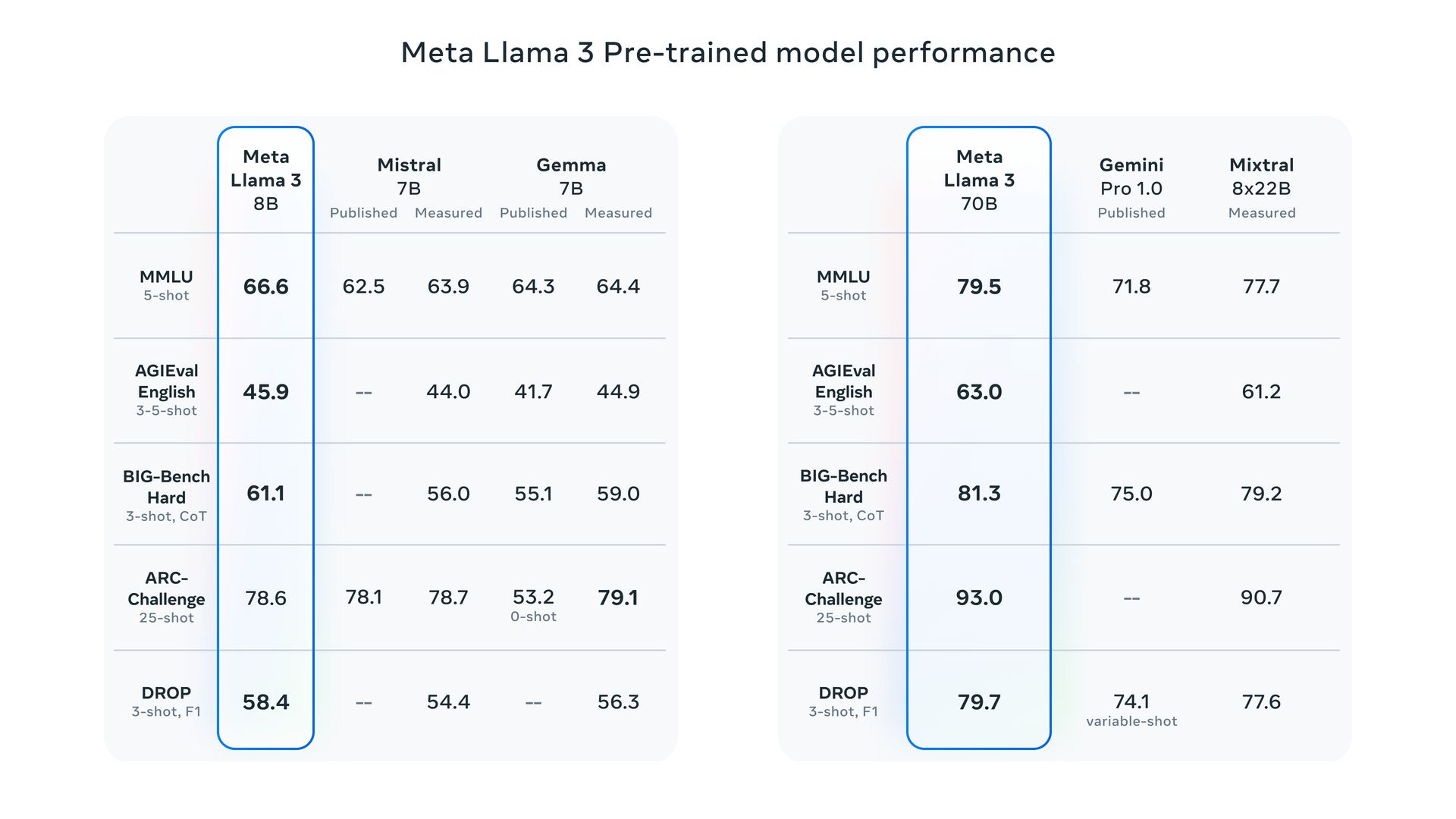

Because competitors use differing evaluation methodologies, a direct comparison between the Llama 3 benchmark and the ones used for rival models is difficult. Still, Meta claims that Llama 3 models trained on its dataset achieved exceptional performance across all evaluated tasks. If those claims hold up under independent testing, Meta AI would be on par with the best in the LLM field.

Here’s a deeper look at how Llama 3 benchmarks stack up:

- Parameter scale: Meta claims that its 8B and 70B parameter Llama 3 models surpass Llama 2 and set a new state of the art for LLMs of similar size.

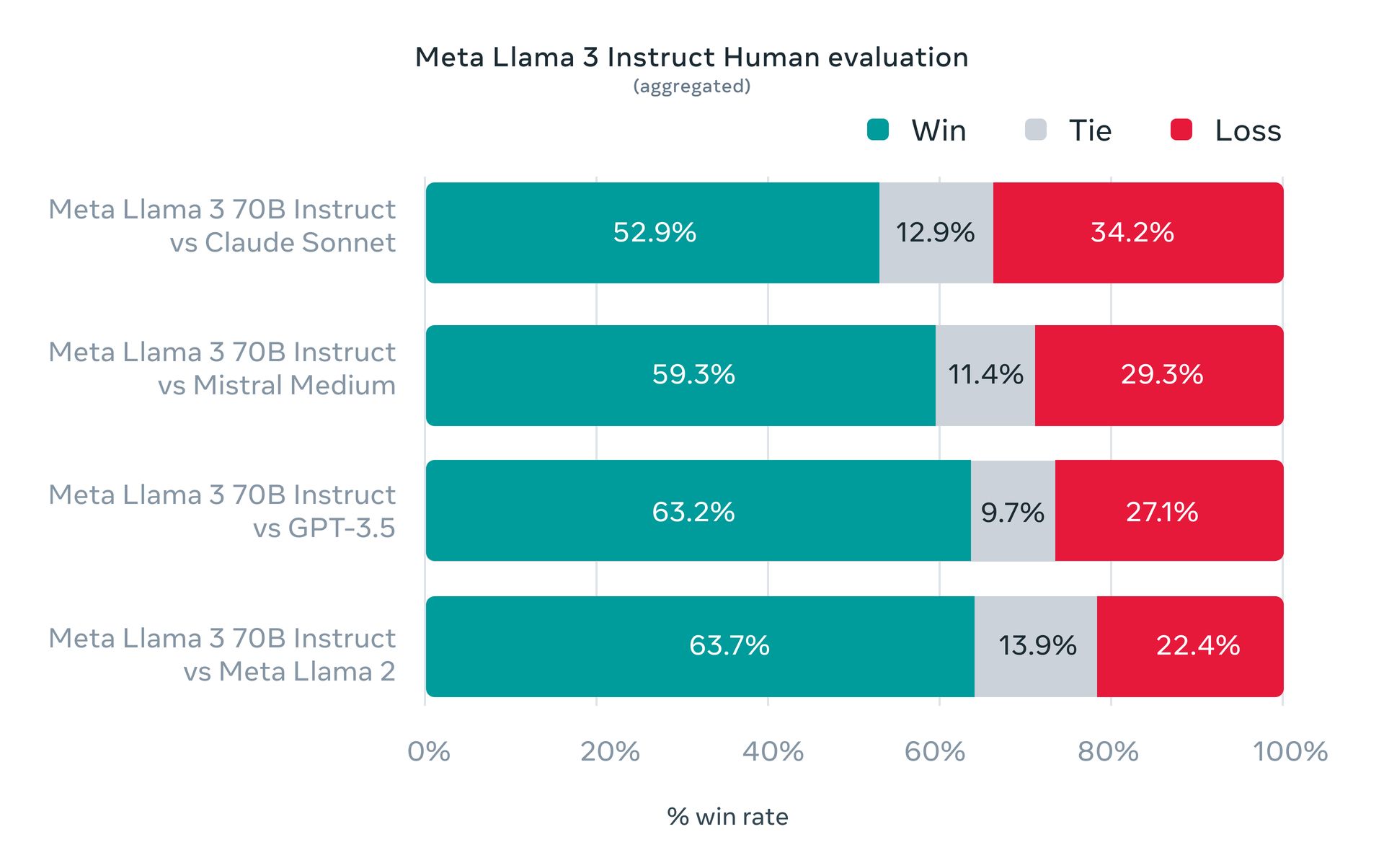

- Human evaluation: Meta ran human evaluations on a dataset covering 12 key use cases. According to Meta, the results show the instruction-tuned 70B Llama 3 model comparing favorably with rivals of similar size in real-world scenarios.
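
Meta does not detail its rating pipeline here, but pairwise human evaluations of this kind are commonly summarized as win, tie, and loss rates. The sketch below is illustrative only: it assumes each rater verdict is recorded as "win", "tie", or "loss" from Llama 3’s point of view, and the function name `pairwise_rates` and the sample verdicts are hypothetical, not Meta’s data.

```python
from collections import Counter

VERDICTS = ("win", "tie", "loss")

def pairwise_rates(judgments: list[str]) -> dict[str, float]:
    """Share of 'win', 'tie' and 'loss' verdicts for model A against model B."""
    if not judgments:
        raise ValueError("need at least one judgment")
    counts = Counter(judgments)
    # Reject labels outside the assumed three-way verdict scheme
    unknown = set(counts) - set(VERDICTS)
    if unknown:
        raise ValueError(f"unexpected verdicts: {sorted(unknown)}")
    return {v: counts[v] / len(judgments) for v in VERDICTS}

# Hypothetical rater verdicts: Llama 3 70B (A) vs. a rival model (B)
sample = ["win", "win", "tie", "loss", "win", "tie", "win", "loss"]
print(pairwise_rates(sample))  # {'win': 0.5, 'tie': 0.25, 'loss': 0.25}
```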

These are Meta’s own evaluations, and independent benchmarks might be necessary for a more definitive comparison.

Despite current benchmark limitations, Llama 3 showcased strong performance across various tasks (Image credit)

Open-weights vs open-source

It’s crucial to distinguish between “open-weights” and “open-source.” While Llama 3’s models and weights can be downloaded for free, it doesn’t meet the strict definition of open source: access and use are governed by Meta’s own license, which imposes restrictions, and the training data has not been released.

Llama 3 comes in two sizes: 8 billion (8B) and 70 billion (70B) parameters. Both are available for free download on Meta’s website after a simple sign-up process.

A technical deep dive into Meta AI

Llama 3 offers two versions:

- Pre-trained: This is the raw model focused on next-token prediction.

- Instruction-tuned: This version is fine-tuned to follow specific user instructions.

Both versions have a context limit of 8,192 tokens.
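
In practice, the two versions differ in how they are prompted: the pre-trained model simply continues raw text, while the instruction-tuned model expects conversation turns wrapped in special tokens. The sketch below follows the chat format as we understand it from Meta’s Llama 3 model card (tokens such as `<|begin_of_text|>` and `<|eot_id|>`). The helper names `format_llama3_chat` and `fits_in_context` are hypothetical, and real token counts would come from the Llama 3 tokenizer, which is not shown here.

```python
CONTEXT_LIMIT = 8192  # tokens shared by the prompt and the generated reply

def format_llama3_chat(messages: list[dict[str, str]]) -> str:
    """Render chat turns in the Llama 3 instruct prompt format (per Meta's model card)."""
    prompt = "<|begin_of_text|>"
    for msg in messages:
        # Each turn: role header, blank line, content, end-of-turn token
        prompt += (
            f"<|start_header_id|>{msg['role']}<|end_header_id|>\n\n"
            f"{msg['content']}<|eot_id|>"
        )
    # Leave an open assistant header so the model writes the next turn
    return prompt + "<|start_header_id|>assistant<|end_header_id|>\n\n"

def fits_in_context(prompt_tokens: int, max_new_tokens: int) -> bool:
    """True if the prompt plus the requested reply length fits in the 8,192-token window."""
    return prompt_tokens + max_new_tokens <= CONTEXT_LIMIT

print(format_llama3_chat([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Summarize the Llama 3 announcement."},
]))
print(fits_in_context(prompt_tokens=7900, max_new_tokens=512))  # False
```

Because the 8,192-token limit covers both the formatted prompt and the reply, long conversations have to be truncated or summarized before the budget runs out.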

Llama 3 models, available in 8 billion (8B) and 70 billion (70B) parameters (Image credit)

Training details

- Training hardware: Meta employed two custom-built clusters, each containing a staggering 24,000 GPUs, for training Llama 3.

- Training data: Mark Zuckerberg, Meta’s CEO, revealed in a podcast interview that the 70B model was trained on a massive dataset of around 15 trillion tokens. Interestingly, the model had not saturated by the end of training: its performance was still improving when training stopped, suggesting there may be room for further gains with even more data.

- Future plans: Meta is currently training a colossal 400B parameter version of Llama 3, potentially putting it in the same performance league as rivals like GPT-4 Turbo and Gemini Ultra on benchmarks like MMLU, GPQA, HumanEval, and MATH.
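
To put the 15 trillion token figure in perspective, a back-of-the-envelope calculation divides training tokens by parameter count. The reference point of roughly 20 tokens per parameter is the commonly cited compute-optimal heuristic from DeepMind’s Chinchilla scaling study; treat it as an approximate rule of thumb, not a hard limit, and note that the inputs below are the rounded figures quoted above.

```python
TRAINING_TOKENS = 15e12   # ~15 trillion tokens (figure cited by Zuckerberg)
PARAMS_70B = 70e9         # 70 billion parameters
CHINCHILLA_TOKENS_PER_PARAM = 20  # rough compute-optimal heuristic, not a hard limit

tokens_per_param = TRAINING_TOKENS / PARAMS_70B
print(f"{tokens_per_param:.0f} tokens per parameter")  # 214 tokens per parameter
print(f"~{tokens_per_param / CHINCHILLA_TOKENS_PER_PARAM:.0f}x the Chinchilla heuristic")  # ~11x the Chinchilla heuristic
```

Training roughly ten times past that heuristic and still seeing improvement is what makes the “never saturated” observation notable.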

Current LLM benchmarks have well-known limitations, including training data contamination (benchmark questions leaking into the training set) and vendors cherry-picking the results they publish.
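
Contamination means a model may be recalling test questions it saw during training rather than reasoning about them. One simple, commonly used screen is to look for long word sequences (n-grams) shared between a benchmark item and the training text. The sketch below is illustrative only: the 8-word window, whitespace tokenization, and the function names are assumptions, not Meta’s methodology.

```python
def word_ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """All lowercase, whitespace-split word n-grams in text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def looks_contaminated(benchmark_item: str, training_text: str, n: int = 8) -> bool:
    """Flag the item if any of its n-word sequences also occurs in the training text."""
    return bool(word_ngrams(benchmark_item, n) & word_ngrams(training_text, n))

item = "What is the capital of France? Answer: Paris"
leaked = "Quiz dump: what is the capital of France? Answer: Paris and more"
clean = "Paris is a large European city with many museums"
print(looks_contaminated(item, leaked))  # True
print(looks_contaminated(item, clean))   # False
```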

Despite these limitations, Meta provided benchmark results showing Llama 3’s performance on MMLU (general knowledge), GSM-8K (grade-school math word problems), HumanEval (coding), GPQA (graduate-level science questions), and MATH (competition-level math problems).

These results position the 8B model favorably against open-weights competitors like Google’s Gemma 7B and Mistral 7B Instruct, while the 70B model holds its own against established names like Gemini 1.5 Pro and Claude 3 Sonnet.
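
Headline scores on multiple-choice benchmarks such as MMLU boil down to the percentage of questions answered correctly; vendors’ setups differ in details like prompt format and the number of few-shot examples, which is one reason cross-vendor comparisons are tricky. A minimal sketch, assuming each model output has already been reduced to a single answer letter; the function name and data are made up for illustration.

```python
def accuracy_percent(predictions: list[str], answers: list[str]) -> float:
    """Percentage of multiple-choice predictions that match the answer key."""
    if not answers or len(predictions) != len(answers):
        raise ValueError("predictions and answers must be non-empty and aligned")
    # Compare letters case-insensitively, ignoring stray whitespace
    correct = sum(
        p.strip().upper() == a.strip().upper() for p, a in zip(predictions, answers)
    )
    return 100 * correct / len(answers)

# Made-up model outputs and answer key for five MMLU-style questions
preds = ["A", "C", "B", "D", "A"]
key = ["A", "B", "B", "D", "C"]
print(accuracy_percent(preds, key))  # 60.0
```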

Meta employed custom-built clusters containing 24,000 GPUs each for training Llama 3 (Image credit)

Accessibility of Llama 3

Meta plans to make Llama 3 models available on major cloud platforms like AWS, Databricks, Google Cloud, and others, ensuring broad accessibility for developers.

Llama 3 powers Meta’s virtual assistant, Meta AI, which will be featured prominently in the search features of Facebook, Instagram, WhatsApp, and Messenger, as well as on a dedicated website with a ChatGPT-like interface that also supports image generation.

Additionally, Meta has partnered with Google to integrate real-time search results into the assistant, building on its existing partnership with Microsoft’s Bing.

Featured image credit: Meta