Google unveils Infini-attention technique for super-sized conversations with language models

Large language models (LLMs) are computer programs that have been trained on massive amounts of text data. This allows them to generate text, translate languages, write different kinds of creative content, and answer your questions in an informative way.

However, LLMs have a limitation: they can only handle a fixed amount of information at a time, known as their context window. This is like having a conversation with someone who can only remember the last few sentences you said.

Google researchers have developed a new technique called Infini-attention that allows LLMs to hold onto and use much more information during a conversation. This means you can provide far more context with your questions and get more comprehensive answers. Imagine asking an LLM to summarize a complex historical event, or to write a fictional story that builds on a detailed backstory you provide. With Infini-attention, these kinds of long-context interactions become more practical.

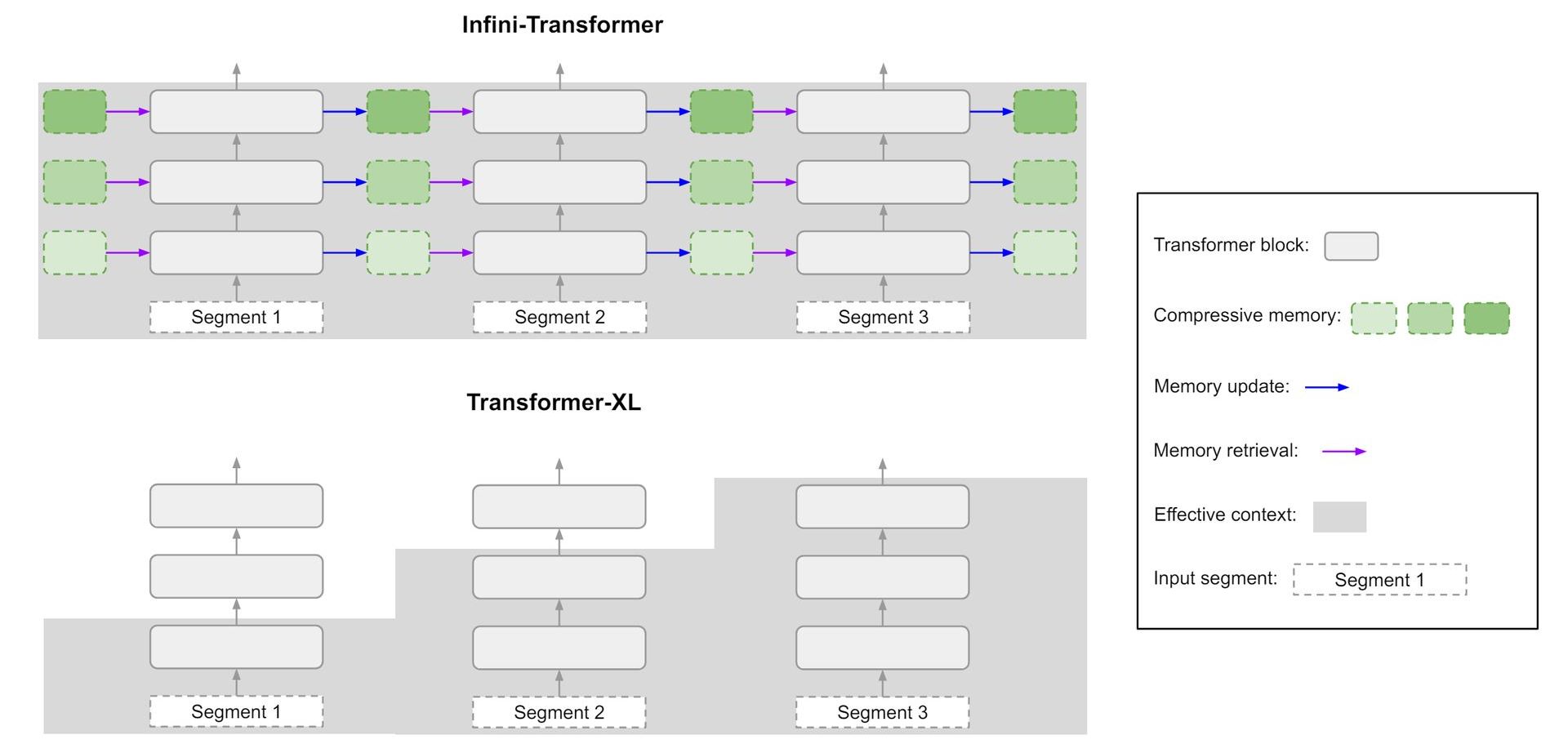

How does Infini-attention work?

Traditional LLMs process information in fixed-size chunks, focusing on the current chunk and discarding or compressing older ones. This approach hinders their ability to capture long-range dependencies and retain contextual information crucial for tasks requiring broader understanding.
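
To make the limitation concrete, here is a minimal Python sketch of a fixed context window. It assumes a simple whitespace tokenizer and a hypothetical `visible_context` helper: anything older than the most recent `window` tokens is dropped before the model ever sees it.

```python
def visible_context(tokens: list[str], window: int) -> list[str]:
    """Return the tokens a fixed-window model can still attend to.

    Hypothetical helper: tokens older than the last `window` are discarded outright.
    """
    if window <= 0:
        raise ValueError("window must be a positive number of tokens")
    return tokens[-window:]


conversation = "I saw a great movie then tried a new bread recipe".split()
print(visible_context(conversation, window=4))  # ['a', 'new', 'bread', 'recipe']
```

With a window of four tokens, the mention of the movie has already fallen out of view.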

Infini-attention improves upon conventional LLMs by breaking down input into manageable chunks and employing a sophisticated attention mechanism (Image credit)

Infini-attention addresses this limitation by letting the model draw on information from earlier parts of a long input, not just the chunk it is currently reading. It achieves this by pairing the standard attention mechanism with a compressive memory inside the same attention layer.

Here’s a breakdown of its core functionalities:

Chunking and attention

Similar to traditional LLMs, Infini-attention first divides the input sequence into smaller segments. During processing, the model employs an attention mechanism to identify the most relevant parts within each chunk for the current task. This attention mechanism assigns weights to different elements within the chunk, indicating their significance to the current context.
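
A minimal sketch of this step in plain Python, assuming toy two-dimensional embeddings and hypothetical helper names (`split_into_segments`, `local_causal_attention`). It cuts the input into segments and runs standard causal scaled dot-product attention inside each segment only; the learned query, key and value projections of a real model are omitted for brevity.

```python
import math

Vector = list[float]
Matrix = list[Vector]


def split_into_segments(seq: list, segment_len: int) -> list[list]:
    """Cut a sequence into consecutive segments of at most `segment_len` items."""
    return [seq[i:i + segment_len] for i in range(0, len(seq), segment_len)]


def softmax(scores: Vector) -> Vector:
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def local_causal_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention confined to one segment.

    Position t only attends to positions 0..t of the same segment, so nothing
    outside the segment influences the output.
    """
    d = len(q[0])
    out = []
    for t, q_t in enumerate(q):
        scores = [sum(a * b for a, b in zip(q_t, k[j])) / math.sqrt(d) for j in range(t + 1)]
        weights = softmax(scores)  # how much each earlier token matters to token t
        out.append([sum(w * v[j][c] for j, w in enumerate(weights)) for c in range(len(v[0]))])
    return out


# Toy token embeddings; for brevity they double as queries, keys and values
# (a real model first applies learned projection matrices).
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5], [0.2, 0.8]]
segments = split_into_segments(embeddings, segment_len=2)
outputs = [local_causal_attention(seg, seg, seg) for seg in segments]
for seg_out in outputs:
    print(seg_out)
```

Each output row is a weighted average of the value vectors the token is allowed to see, and those weights are the "significance" scores described above.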

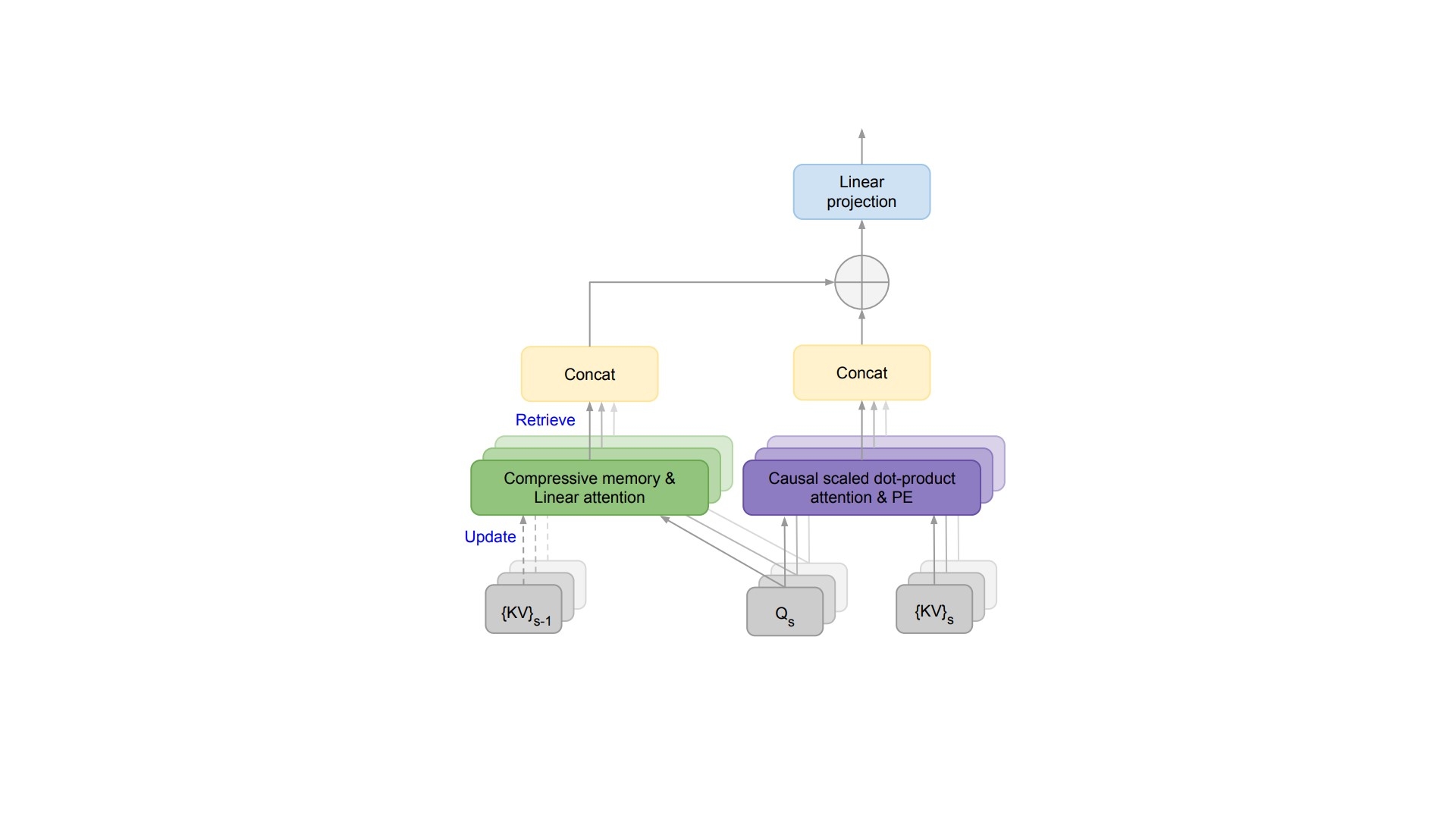

Memory creation

Unlike traditional models that discard or heavily compress past chunks, Infini-attention folds each processed chunk into a compressive memory. According to the research paper describing the technique, the model reuses the key and value vectors that its attention step has already computed for that chunk and adds them into the memory. The memory therefore accumulates a running summary of everything read so far, without storing every token individually.
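
The plain-Python sketch below follows the simple linear update rule described in the Infini-attention paper: each key is passed through σ(x) = ELU(x) + 1, the memory matrix accumulates σ(K)^T V, and a normalization vector accumulates the sum of σ(k). The function names and toy numbers are hypothetical, and the paper also describes a "delta rule" variant of the update that this sketch does not cover.

```python
import math

Vector = list[float]
Matrix = list[Vector]


def elu_plus_one(x: float) -> float:
    """sigma(x) = ELU(x) + 1, which keeps every feature strictly positive."""
    return x + 1.0 if x > 0 else math.exp(x)


def empty_memory(d_key: int, d_value: int) -> tuple[Matrix, Vector]:
    """A zero memory matrix (d_key x d_value) and a zero normalization vector (d_key)."""
    return [[0.0] * d_value for _ in range(d_key)], [0.0] * d_key


def update_memory(memory: Matrix, norm: Vector, keys: Matrix, values: Matrix) -> tuple[Matrix, Vector]:
    """Fold one segment's keys and values into the memory (linear update rule).

    memory += sigma(K)^T V   and   norm += sum over the segment's keys of sigma(k)
    """
    memory = [row[:] for row in memory]  # copy so the caller's memory is untouched
    norm = norm[:]
    for k, v in zip(keys, values):
        sk = [elu_plus_one(x) for x in k]
        for i, sk_i in enumerate(sk):
            norm[i] += sk_i
            for j, v_j in enumerate(v):
                memory[i][j] += sk_i * v_j
    return memory, norm


memory, norm = empty_memory(d_key=2, d_value=2)
# (keys, values) for two consecutive segments of a toy input
segment_1 = ([[1.0, 0.0], [0.0, 1.0]], [[1.0, 2.0], [3.0, 4.0]])
segment_2 = ([[-1.0, 2.0]], [[0.5, 0.5]])

memory, norm = update_memory(memory, norm, *segment_1)
print(memory, norm)  # [[5.0, 8.0], [7.0, 10.0]] [3.0, 3.0]
memory, norm = update_memory(memory, norm, *segment_2)
print(len(memory), len(memory[0]))  # 2 2: the size never grows with more segments
```

Note that nothing is appended: every segment is summed into the same small matrix, which is what keeps the memory compact.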

Memory storage

These compressed memory representations are stored in a dedicated memory within each attention layer. The research paper describes this memory as a fixed-size associative matrix that maps keys to values, paired with a small normalization vector. Because each new chunk is added into the same matrix rather than appended to a growing list, the memory's size stays constant no matter how long the input becomes.
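
To see why a fixed-size memory matters, here is a back-of-the-envelope Python sketch comparing how many numbers one attention head has to keep around. A standard key-value cache stores one key and one value per token, while the Infini-attention paper gives the compressive memory per head as a d_key × d_value matrix plus a d_key normalization vector. The head dimension of 128 and the helper names are illustrative assumptions, and the sketch ignores the local cache Infini-attention still keeps for the current segment.

```python
def kv_cache_size(num_tokens: int, d_key: int, d_value: int) -> int:
    """Numbers a standard KV cache holds for one head: a key and a value per token."""
    return num_tokens * (d_key + d_value)


def compressive_memory_size(d_key: int, d_value: int) -> int:
    """Numbers the compressive memory holds for one head: a d_key x d_value matrix
    plus a d_key normalization vector, independent of input length."""
    return d_key * d_value + d_key


D = 128  # illustrative head dimension (assumption)
for n in (2_048, 32_768, 1_000_000):
    print(f"{n:>9,} tokens: KV cache {kv_cache_size(n, D, D):>11,}  memory {compressive_memory_size(D, D):,}")
```

The KV cache grows in step with the input (256,000,000 numbers at a million tokens in this setup), while the compressive memory stays at 16,512.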

Google Infini-attention technique compresses important data into a memory representation that captures the essence of previous interactions, which is stored efficiently for future use (Image credit)

Contextual integration

When processing new information, the LLM doesn't rely solely on the current chunk. It also queries the compressed memory using the current chunk's query vectors, retrieving the stored values whose keys best match them. A learned gate then blends this retrieved long-range context with the output of ordinary attention over the current chunk. By integrating information from both the current chunk and relevant past context, the LLM gains a more comprehensive understanding of the overall input sequence.
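
A minimal plain-Python sketch of this step, following the retrieval and gating formulas in the Infini-attention paper: the memory is read as σ(q)M / (σ(q)·z), with σ(x) = ELU(x) + 1, and a learned scalar β mixes the result with local attention through sigmoid(β). The helper names, the toy memory contents and the β values are hypothetical.

```python
import math

Vector = list[float]
Matrix = list[Vector]


def elu_plus_one(x: float) -> float:
    """sigma(x) = ELU(x) + 1, the same positive feature map used when writing to memory."""
    return x + 1.0 if x > 0 else math.exp(x)


def retrieve(memory: Matrix, norm: Vector, query: Vector) -> Vector:
    """Read from the compressive memory: sigma(q) M / (sigma(q) . z)."""
    sq = [elu_plus_one(x) for x in query]
    denom = sum(a * b for a, b in zip(sq, norm))
    d_value = len(memory[0])
    if denom == 0.0:  # empty memory (e.g. the very first segment): nothing to recall
        return [0.0] * d_value
    return [sum(sq[i] * memory[i][j] for i in range(len(sq))) / denom for j in range(d_value)]


def gated_combine(mem_out: Vector, local_out: Vector, beta: float) -> Vector:
    """Blend memory and local-attention outputs: g * mem + (1 - g) * local, g = sigmoid(beta)."""
    g = 1.0 / (1.0 + math.exp(-beta))
    return [g * m + (1.0 - g) * a for m, a in zip(mem_out, local_out)]


# A toy memory that has absorbed two key/value pairs:
#   key [1, 0] -> value [1, 2]   and   key [0, 1] -> value [3, 4]
# i.e. M = sigma([1, 0])^T [1, 2] + sigma([0, 1])^T [3, 4],  z = sigma([1, 0]) + sigma([0, 1])
memory = [[5.0, 8.0], [7.0, 10.0]]
norm = [3.0, 3.0]

recalled = retrieve(memory, norm, query=[1.0, 0.0])
print(recalled)  # roughly [1.89, 2.89]: a blurred recall, slightly closer to [1, 2] than to [3, 4]
local = [0.0, 1.0]  # stand-in for the local attention output of the current segment
print(gated_combine(recalled, local, beta=0.0))  # beta = 0 gives an even 50/50 blend
```

The recall is approximate because both stored pairs share the same matrix; a large positive β leans the output on memory, a large negative β on local attention.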

So, Infini-attention equips LLMs with the ability to consider a broader range of information during interactions. This enhanced contextual awareness can lead to several potential benefits:

- By incorporating a wider range of relevant information, LLMs can generate more accurate and informative responses to complex queries.

- Infini-attention allows LLMs to follow complex arguments by remembering earlier parts of the interaction, which can help with tasks requiring logical reasoning and inference.

- With access to a broader context, LLMs can generate more creative and coherent text, such as scripts, poems, or emails, tailored to the specific situation.

Imagine you’re having a conversation with a friend. They start by mentioning a movie they just saw, then launch into a detailed explanation of a new recipe they’re trying, before circling back to the movie to analyze a specific plot point. While this might sound like a normal conversation, for AI chatbots, it can be a recipe for confusion.

Recent advancements like Google’s Infini-attention are enabling chatbots to hold onto and utilize more information during interactions. This sounds great on paper – more context means the AI can understand us better, right? Well, not exactly. Just like that friend who can’t stay on topic, overloading an AI with context can have some surprising drawbacks.

Let’s face it, information overload is a real problem, and AI chatbots aren’t immune. Drowning them in irrelevant details can make it difficult for them to identify the key points and formulate a coherent response. Think of it like trying to find a specific recipe in a cookbook filled with grocery lists and restaurant reviews – not exactly efficient.

There’s also the computational cost to consider. Storing and processing a vast amount of context requires serious muscle. This can slow down the chatbot and make it less user-friendly. Imagine waiting minutes for a response because the AI is busy untangling a web of irrelevant information. Not exactly the seamless interaction we’re hoping for.
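
For a rough sense of scale, the Python sketch below counts the query-key scores that causal self-attention computes. Full attention compares every token with every earlier token, so the count grows with the square of the context length; confining attention to fixed-length segments, as Infini-attention does for its local attention, makes it grow roughly linearly instead. The 2,048-token segment length and the helper names are illustrative assumptions, and the sketch does not count memory reads and writes.

```python
def full_attention_scores(num_tokens: int) -> int:
    """Query-key scores for full causal attention: token t scores tokens 0..t."""
    return num_tokens * (num_tokens + 1) // 2


def segmented_attention_scores(num_tokens: int, segment_len: int) -> int:
    """Scores when causal attention is confined to fixed-length segments
    (memory reads and writes are not counted)."""
    full_segments, remainder = divmod(num_tokens, segment_len)
    return full_segments * full_attention_scores(segment_len) + full_attention_scores(remainder)


SEGMENT_LEN = 2_048  # illustrative segment length (assumption)
for n in (2_048, 32_768, 1_000_000):
    full = full_attention_scores(n)
    seg = segmented_attention_scores(n, SEGMENT_LEN)
    print(f"{n:>9,} tokens: full {full:>15,}  segmented {seg:>13,}")
```

Even with segmentation the total still grows with input length, so a longer context is never free; it just grows far more slowly than with full attention.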

So, where’s the sweet spot?

The truth is that the ideal context size depends entirely on the situation. For complex tasks like question answering or creative writing, a broader context can be helpful. But for simpler interactions, it can just be clutter.

The key is finding a balance – providing enough context for the AI to understand the situation, but not so much that it gets lost in the weeds. Think of it like setting the scene for a play – you want to provide enough details to set the stage, but not bog down the audience with unnecessary backstory.

As AI chatbots continue to evolve, understanding the delicate dance between context and clarity will be crucial. By striking the right balance, we can ensure these AI companions are not just walking encyclopedias, but intelligent and engaging conversation partners.

Featured image credit: Freepik