THE FUTURE IS NOW: EUROPEAN PARLIAMENT’S LANDMARK APPROVAL OF THE ARTIFICIAL INTELLIGENCE ACT

With the EU’s AI Act, a revolutionary era in tech governance begins! This landmark legislation is set to redefine the global AI landscape by introducing a risk-based approach to AI systems. It is a major step that paves the way for responsible AI use and could establish a new global norm. The AI Act is not just about regulation: it is about shaping a safer, more ethical future for artificial intelligence.

Illustration D&A Partners, 2024 ©

In December 2023, EU negotiators reached a political agreement on the EU Artificial Intelligence Act (AI Act), and on 13 March 2024 it was endorsed by the vast majority of Members of the European Parliament: 523 votes in favour, 46 against and 49 abstentions. The AI Act will enter into force twenty days after its publication in the Official Journal of the EU, but it will apply in stages: companies will have between 6 and 36 months to comply, depending on the provision.

The AI Act is an undeniable breakthrough in the regulation of innovation and new technologies related to artificial intelligence (AI). It is the world’s first comprehensive law on artificial intelligence systems, so its significance extends well beyond the EU. The AI Act regulates the development and use of AI, imposes a wide range of mandatory requirements on companies that develop and deploy AI, and provides for significant fines. Notably, the AI Act will apply not only to European companies developing and deploying AI but also to companies outside the EU whose AI systems are used in the EU. A dedicated body, the European Artificial Intelligence Office, is being established to implement the AI Act; it will enforce compliance with the mandatory rules for developing and using general-purpose AI (GPAI) models.

According to the latest version of the AI Act:

“General-purpose AI model (GPAI) means an AI model including where such an AI model is trained with a large amount of data using self-supervision at scale, that displays significant generality and is capable of competently performing a wide range of distinct tasks regardless of the way the model is placed on the market and that can be integrated into a variety of downstream systems or applications, except AI models that are used for research, development or prototyping activities before they are released on the market”.

The best-known example of GPAI is the model behind ChatGPT. The category also includes text and image generation models such as Meta’s LLaMA and Midjourney.

According to a press release on the website of the European Parliament, the purpose of the regulation is to:

“protect fundamental rights, democracy, the rule of law and environmental sustainability from high-risk AI, while boosting innovation and establishing Europe as a leader in the field”.

The AI Act is part of the EU’s broader digital strategy, which aims to create favourable conditions for the development of innovative technology.

APPROACH TO AI REGULATION

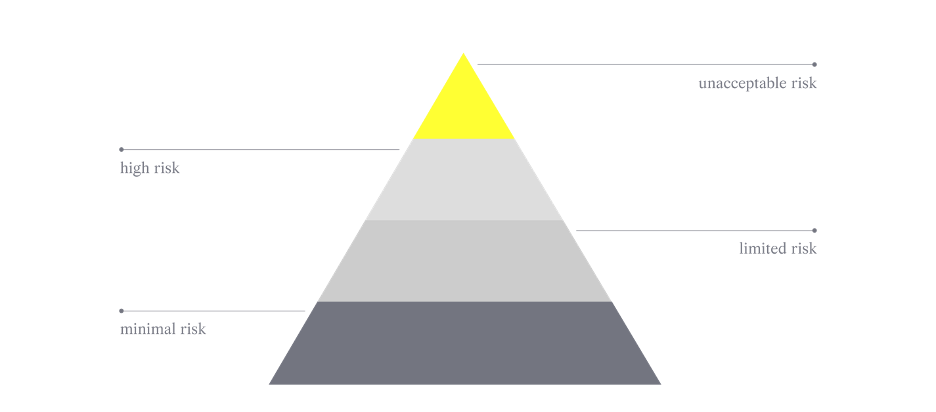

The EU has chosen a risk-based approach to the legal regulation of AI. In practice, this means that the AI Act ranks AI systems according to their risk and sets specific rules for each group: from an outright ban on unacceptable-risk systems and strict rules for high-risk systems to complete freedom for AI systems that pose no threat. This approach is well suited to a rapidly developing industry: on the one hand, it helps prevent uncontrolled and dangerous uses of artificial intelligence; on the other, it does not discourage businesses from investing in and developing artificial intelligence.

SYSTEM OF RISKS

The AI Act classifies AI systems into four risk categories:

Illustration D&A Partners, 2024 ©

Unacceptable risk

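This group includes, for example, biometric categorization of people based on sensitive characteristics, real-time remote biometric identification in publicly accessible spaces, inferring emotions in the workplace and in educational institutions, and social scoring systems.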

High risk

This group covers a wide variety of AI systems. For example, AI used to operate critical infrastructure (in particular, road traffic) is high-risk where its use could endanger citizens’ lives and health. Another example is an AI system used in education that may affect access to education, for instance by grading exams. AI used to evaluate CVs in recruitment also falls into the high-risk category.

In addition, AI used in healthcare can also be considered high-risk. AI used in robot-assisted surgery, AI used for in vitro diagnostic medical tests (such as COVID-19 tests) and AI in medical devices (such as diagnostic, preventive or predictive tools for detecting diseases) are all examples of potentially high-risk AI.

Most of the AI Act is devoted to the regulation of this particular category of AI systems.

Limited risk

The AI Act introduces specific transparency requirements for limited-risk AI systems: providers and deployers must inform end users that they are interacting with AI. For example, a chatbot will have to disclose that the person is interacting with a machine. AI-generated content must be clearly labelled as artificially generated, and the same requirement applies to audio and video deepfakes.

Minimal or no risk

Finally, the AI Act allows the unrestricted use of minimal-risk AI. This includes, for example, AI-enabled video games or spam filters. The European Commission also highlights that the vast majority of AI systems currently used in the EU fall into this category.

THE ESSENCE OF REGULATION

To implement a risk-based approach, the AI Act provides the following rules:

- Prohibits AI systems that pose unacceptable risks. Narrow exceptions exist only for law enforcement, subject to prior authorisation by a judicial or independent administrative authority (e.g., real-time facial recognition to search for a missing child).

- Defines a list of high-risk AI systems and sets clear requirements for these systems and for the companies that develop and deploy them. The requirements include, for example, mandatory risk assessment and management, high-quality training data sets, automatic logging of events over the system’s lifetime, appropriate human oversight and other risk-mitigation measures.

- Requires a conformity assessment before a high-risk AI system is put into service or placed on the market.

- Specifies transparency requirements for limited-risk AI systems.

- Exempts minimal-risk AI from regulation entirely.
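To make the tiered structure concrete, here is a minimal Python sketch that maps each risk tier to the headline treatment summarised in the list above. It is illustrative only: the `RiskTier` enum, the `OBLIGATIONS` table and the `obligations_for` helper are hypothetical names, and the obligation strings are simplified paraphrases, not a legal checklist or a way to classify a real system.

```python
from enum import Enum


# Hypothetical labels for the four risk tiers described in this article.
class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, simplified mapping from each tier to its headline treatment.
# These strings paraphrase the article; they are not quotations from the AI Act.
OBLIGATIONS: dict[RiskTier, list[str]] = {
    RiskTier.UNACCEPTABLE: ["prohibited (narrow law-enforcement exceptions)"],
    RiskTier.HIGH: [
        "conformity assessment before being put into service or placed on the market",
        "risk assessment and management",
        "high-quality training data",
        "automatic event logging",
        "human oversight",
    ],
    RiskTier.LIMITED: [
        "disclose AI interaction to users",
        "label AI-generated content",
    ],
    RiskTier.MINIMAL: [],  # no mandatory obligations in this simplified summary
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Return the simplified list of obligations attached to a risk tier."""
    return OBLIGATIONS[tier]


if __name__ == "__main__":
    for tier in RiskTier:
        items = obligations_for(tier)
        summary = ", ".join(items) if items else "no specific obligations"
        print(f"{tier.value}: {summary}")
```

Keeping the tiers in an enum and the obligations in a single lookup table mirrors the Act’s logic: once a system’s tier is known, the tier determines what is required of it. Deciding which tier a real system belongs to remains a legal assessment that no such table can replace.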

Additionally, the AI Act sets out obligations for GPAI providers:

- All providers of GPAI models must draw up technical documentation and instructions for use, comply with EU copyright law, and publish a sufficiently detailed summary of the content used to train the model.

- Providers of GPAI models that pose a systemic risk, whether open or closed, must also perform model evaluations, assess and mitigate systemic risks, track and report serious incidents, and ensure adequate cybersecurity protection.

LIABILITY

Non-compliance with the AI Act carries large fines: the most serious violations, such as using prohibited AI practices, can be fined up to €35 million or 7% of the company’s worldwide annual turnover, whichever is higher.
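The fine ceiling comes down to a simple calculation. The sketch below assumes the rule applied to companies for the most serious violations: the cap is the higher of a fixed €35 million and 7% of worldwide annual turnover for the preceding financial year. The function name `max_fine_eur` is hypothetical, and the result is only the statutory maximum, not the fine a regulator would actually impose.

```python
# Assumed parameters for the most serious tier of fines: a fixed cap
# and a share of worldwide annual turnover, whichever is higher.
FIXED_CAP_EUR = 35_000_000
TURNOVER_SHARE_PERCENT = 7


def max_fine_eur(worldwide_annual_turnover_eur: int) -> int:
    """Return the maximum possible fine, assuming 'whichever is higher'.

    Integer arithmetic is used to avoid floating-point rounding; the
    turnover-based cap is rounded down to a whole euro (a simplification).
    """
    turnover_cap = worldwide_annual_turnover_eur * TURNOVER_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_cap)


if __name__ == "__main__":
    print(max_fine_eur(1_000_000_000))  # 7% is 70,000,000, above the fixed cap
    print(max_fine_eur(200_000_000))    # 7% is 14,000,000, so the fixed cap applies
```

Under these assumptions, the first call prints 70000000 and the second prints 35000000. The turnover-based cap overtakes the fixed amount once worldwide annual turnover exceeds €500 million.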

How should businesses proceed?

Once the law applies, the “funny” stories about AI malfunctioning will turn into legal problems. The heaviest regulatory burden will fall on companies that develop and deploy high-risk AI systems. Regardless of the risk category, however, it is now advisable for every company working with artificial intelligence to implement AI compliance.

AI compliance is a system of internal oversight that ensures AI systems, and the processes by which they are developed, implemented and used, meet legal requirements as well as ethical standards.

What should AI compliance include?

- Regulatory compliance: assessing and ensuring compliance with the law throughout the development, implementation and use of AI systems. This covers not only AI-specific laws but also the GDPR (EU General Data Protection Regulation) and copyright rules.

- Ethical standards. Companies, as well as individual users of AI, have to keep in mind that many issues of AI usage remain outside the scope of legal regulations. It is important that AI systems comply not only with the laws but also with ethical principles such as fairness, transparency, accountability and respect for user privacy. Ethical compliance includes identifying biases in AI systems, avoiding privacy violations and minimizing other ethical risks.

Implementing AI compliance is essential for building trust in AI technologies, protecting human rights and encouraging responsible innovation. Recognising how important it is for companies to adapt to the new rules, the European Commission has launched the AI Pact to encourage and support companies that wish to comply voluntarily with the AI Act’s requirements before they become legally binding. The Pact offers opportunities to engage with the Commission and other authorities, share best practices and run awareness-raising activities, helping companies prepare for the new regulations in advance.

Illustration D&A Partners, 2024 ©

CONCLUSION

The whole world has been watching the EU’s efforts to regulate artificial intelligence. The adopted AI Act is likely to become a kind of “gold standard” for most jurisdictions, just as the GDPR did. In the coming years we will see whether this advanced regulation can meet the challenges of the market. However, we can already say with confidence that, given how fast AI technologies are developing, legislation alone will not be enough to ensure the safe use of AI systems. Humanity will have to recognise the importance of ethical self-restraint and the conscious use of AI. Let’s see if it works for us!

Catherine Smirnova

Aleksandra Iugunian

This article was written by Catherine Smirnova & Aleksandra Iugunian of Digital & Analogue Partners. Visit dna.partners to learn more about our team and our services. Be digital, be analogue, be with us!

THE FUTURE IS NOW: EUROPEAN PARLIAMENT’S LANDMARK APPROVAL OF THE ARTIFICIAL INTELLIGENCE ACT was originally published in Coinmonks on Medium.